As the newsletter has grown, I have noticed recurring themes in how regulators regard AI.

AI is now clearly embedded in everyday legal workflows, sometimes explicitly and sometimes via “helpful” features inside existing software. Governance has become a professional risk issue, not a tech hobby.

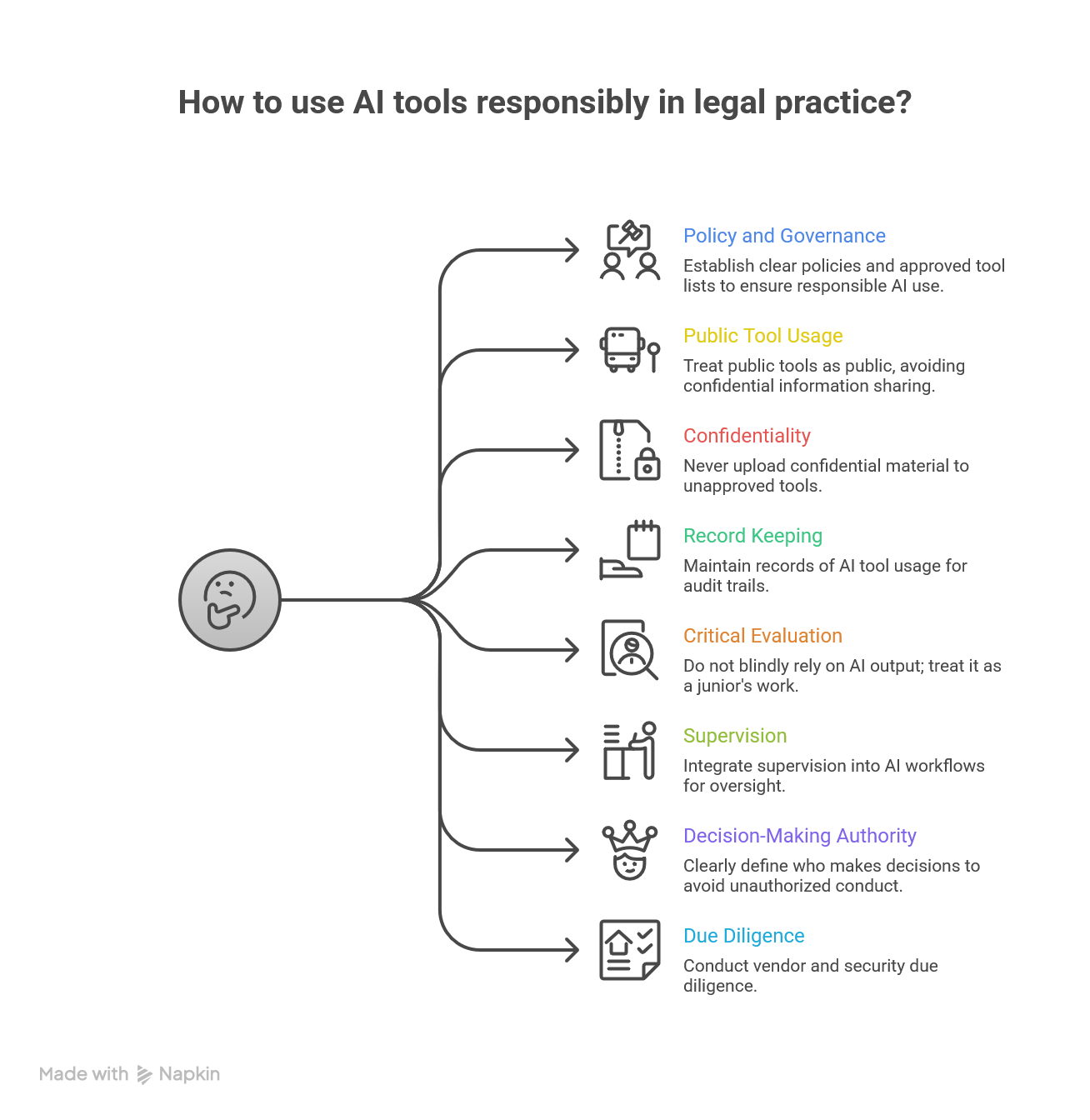

In my view, all practitioners should be mindful of a few key rules before using AI tools.

This post is intended to serve as a one-stop shop for these rules.

The rules are summarised before the ad break, and more detail on each can be found after.

In short, AI can support legal work, but it does not dilute your professional duties.

Update History

This article was first published in December 2025, with 8 rules.

Future revisions to this article will be recorded in this section, so readers can see what has changed.

The Golden Rules

1. Have a clear policy and an approved tool list - policies and governance are going to be key.

2. Treat public tools as public - never put anything into a public tool that you wouldn’t say out loud to a stranger on the bus.

3. Never upload confidential or privileged material to an unapproved tool - this goes hand in hand with 2. above.

4. Keep records of how AI tools have been used - audit trails will be key to showing how you exercised your professional judgment.

5. Never, ever, blindly rely on an output - treat AI output as the work of a keen but inexperienced and unreliable junior.

6. Bake supervision into the AI workflow - this again stresses the governance and oversight that must be in place.

7. Establish who is making the decisions - we do not want a Mazur situation, where your use of an AI tool risks the unauthorised conduct of litigation.

8. Conduct vendor and security due diligence - as you would for any provider of key firm services.

Produced with Napkin AI, a useful tool (as long as you adhere to these rules!)

Ad Break

To help cover the running costs of this newsletter, please check out the advert below. In line with my promise from the start, adverts will always be declared, will only feature products I have actually tried, and will come with some brief thoughts from me.

Although I am trying to incorporate good prompts into the weekly newsletter, I understand some of you are keen for more. HubSpot has some excellent general-purpose ChatGPT prompts worth checking out.

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

The Rules - in detail

1) Have a clear policy and an approved tool list

Write down what is allowed, what is not, and who signs off new tools. Keep it short enough that fee earners actually use it.

What to include:

Approved tools, with permitted uses and data types.

Prohibited uses, including use on court documents unless specified checking standards are met.

Who owns decisions on new tools: typically the COLP (Compliance Officer for Legal Practice) plus IT and information governance, with partner-level oversight of selection and ongoing use.

Why it matters: most “AI incidents” start as well-intentioned improvisation, particularly when people are under time pressure. A written policy helps ensure that staff are trained on the risks and that there is clear accountability.

2) Treat public tools as public tools

“No ungoverned use of public tools” should be explicit, and enforced.

Rule of thumb: if the tool is not approved for client data, do not paste anything from a live file into it. That includes names, dates, unique facts, emails, attachments, medical details, and anything that could identify a matter even if you remove your client’s name.

If you wouldn’t say it out loud in public, it should not be put into a public tool.

Why it matters: the SRA has already flagged the specific risk of staff using online tools such as ChatGPT in relation to client matters, and the wider confidentiality and privilege risks this poses.

3) Never upload confidential or privileged material unless the tool is approved for it

This must be a hard line. Your policy should make clear that privilege and confidentiality are not optional “settings”.

Minimum standard before any confidential upload:

Contracted, business grade service with clear terms.

Documented due diligence on storage, retention, access controls, and whether inputs are used to train models.

A data protection view.

Why it matters: confidentiality and privilege risk is a recurring theme in SRA materials. These principles are core to our profession and must not be diluted by convenience.

4) Keep records of material AI use

If AI contributes to work product, you must keep an audit trail proportionate to the risk.

A practical approach is to record:

The tool used, and its version where known.

The prompt or instruction, or a short summary if it is long.

The output saved to file (or a link to it).

What you verified, and what changes you made.

Who reviewed it and when.
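For firms that keep these records electronically, the points above map naturally onto a simple structured record. The sketch below is a minimal, hypothetical illustration in Python, assuming a firm wants every entry to be complete before it is saved to file. The class name, field names and example values are my own invention, not taken from any SRA template or case management system, and I have split "what you verified" and "what changes you made" into two fields.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIUseRecord:
    """Hypothetical file note of one material use of an AI tool."""

    tool: str              # tool used, and its version where known
    prompt_summary: str    # the prompt or instruction, or a short summary if long
    output_location: str   # where the saved output (or a link to it) sits on file
    verified: str          # what was checked against sources, evidence or instructions
    changes_made: str      # what was changed following that check
    reviewer: str          # who reviewed the work
    reviewed_at: str       # when it was reviewed (ISO 8601 timestamp)

    def is_complete(self) -> bool:
        # A record only counts if every field has been filled in.
        return all(value.strip() for value in asdict(self).values())


# Illustrative example only: the tool, file path and reviewer are invented.
record = AIUseRecord(
    tool="ExampleDraftingTool (version unknown)",
    prompt_summary="First-draft chronology from client instructions",
    output_location="DMS/matter-0001/drafts/chronology-ai-v1",
    verified="All dates checked against correspondence on file",
    changes_made="Two dates corrected; one event added",
    reviewer="Supervising partner",
    reviewed_at=datetime(2025, 12, 1, 10, 30, tzinfo=timezone.utc).isoformat(),
)

if record.is_complete():
    print(json.dumps(asdict(record), indent=2))
else:
    print("Record incomplete: do not file until every field is filled in.")
```

In practice this is more likely to live as a mandatory form in your case management system than as code; the design point is simply that no field is optional.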

Why it matters: we have seen cases where lawyers have been accused of using AI. Making a habit of recording how you have used such tools may well protect your professional reputation. Record keeping also supports supervision, defensibility and learning, and it helps if you later need to explain how a document was produced.

5) No blind reliance on output, ever

AI is the very keen and helpful, but ultimately unreliable and inexperienced, paralegal of the legal world.

We have all had that junior (and many of us probably were that junior!): keen to help, but their output often creates more work for you.

Simply put, you cannot just rely on the output of AI. It must be checked; it must be scrutinised.

At a minimum, always check the following:

Case citations: verify against primary sources.

Quotations: check against the original text.

Facts: check against the evidence and instructions.

Maths: recalculate independently.

Lists (for example disclosure categories): sanity-check for omissions.

Why it matters: the High Court has publicly warned about lawyers citing non-existent cases generated by AI tools, with potential consequences including contempt in serious cases. Check everything, always.

6) Build supervision into the workflow, not as an afterthought

Good, effective supervision needs processes, not reminders.

Examples that work in practice:

A “citations must be checked” sign-off step before anything is filed.

A compulsory label on AI assisted drafts in your case management system.

A requirement that a supervisor reviews prompts and outputs for higher risk tasks (for example witness statements, pleadings, advice notes).

Why it matters: supervision is where informal AI use often collapses. Courts have already framed AI errors as a management issue as much as an individual mistake.

7) Be clear about who is making the decision

AI can assist with options, structure and drafting, but it must not become the decision maker.

This is especially true in litigation. Mazur has shown how much turns on who is authorised to conduct litigation. A solicitor (or other authorised person) must be the guiding mind behind the litigation. This cannot be outsourced to a junior or unauthorised person. Similarly, it absolutely cannot be outsourced to AI.

I am curious how this can possibly sit with new AI-only firms (see the newsletter issue on Grapple).

Why it matters: you want a clean line between (a) tool-assisted preparation and (b) the solicitor’s own decision making. At the risk of repeating myself across these rules, this clean line must be recorded on the file.

8) Do basic vendor and security due diligence

This is more of a rule for senior staff but it impacts juniors and, frankly, it can be helpful for juniors to remind the seniors!

Any new tool must go through thorough due diligence before it is approved.

For any tool that will touch client work, you should be asking:

Where does data go, and who can access it?

Is data used for training?

How is data deleted?

What audit logs exist?

What happens if the vendor is acquired or fails?

Why it matters: legal AI vendors can be volatile. Continuity, export rights, and exit planning are part of sensible risk management, not paranoia.

How did we do?

Hit reply and tell me what you would like covered in future issues or any feedback. We read every email!

Thanks for reading,

Serhan, UK Legal AI Brief

Disclaimer

Guidance and news only. Not legal advice. Always use AI tools safely and in line with best practice.